Being interested in the role of algorithms and their influence on everyday life, I started collecting examples under the #curiousrituals hashtag. Perhaps it's a follow-up to Curious Rituals, perhaps it isn't.

Some intriguing cases I've found recently (most of them come from the non-scientific press, as I'm focusing on everyday life):

Music generated with a script and played by fake users gets a good ranking on online music charts

"Melbourne hacker and payments security professional Peter Filimore, who, it should be mentioned, cannot play or sing a single note, managed to accrue $1,000 in royalties and knock artists like Pink, Nicki Minaj, and Flume off high spots in online music charts through the use of bots.

In an effort to uncover security flaws in online streaming services like Spotify, Filimore decided to send “garbage” tunes to the top of the charts and generate royalties in the process. Filimore started by using algorithms to compile public domain audio and splicing cheesy MIDI tracks together.

Filimore then purchased three Amazon-linked compute instances — virtual servers that are able to run applications — and created a simple hacking script to simulate three listeners playing his songs 24-hours a day for a month, while accruing reviews that described his music as “rubbish.”

[...]

Filimore also explained that using a larger cluster of computing instances could potentially generate thousands of dollars in fraudulent royalties."

Are Face-Detection Cameras Racist?

"When Joz Wang and her brother bought their mom a Nikon Coolpix S630 digital camera for Mother's Day last year, they discovered what seemed to be a malfunction. Every time they took a portrait of each other smiling, a message flashed across the screen asking, "Did someone blink?" No one had. "I thought the camera was broken!" Wang, 33, recalls. But when her brother posed with his eyes open so wide that he looked "bug-eyed," the messages stopped.

Wang, a Taiwanese-American strategy consultant who goes by the Web handle "jozjozjoz," thought it was funny that the camera had difficulties figuring out when her family had their eyes open. So she posted a photo of the blink warning on her blog under the title, "Racist Camera! No, I did not blink... I'm just Asian!""

Bot wars - The arms race of restaurant reservations in SF

"After a while of running this script I had captured a good amount of data. One day I found myself looking at it and noticed that as soon as reservations became available on the website (at 4am), all the good times were immediately taken and were gone by 4:01am. It quickly became obvious that these were reservation bots at work. [...] You fight fire with fire, so I made my own reservation bot. You can get the code here.

I used mechanize to create a simple ruby script that goes through the process of checking for available reservations (in a given time range) and making a reservation under your name.

With this script I was able to start getting reservations again, but I know that this bot war will continue to escalate."

"Scans made by some Xerox copiers are changing numbers on documents, a German computer scientist has discovered. [...] He said the anomaly is caused by Jbig2, an image compression standard. Image compression is typically used to make file sizes smaller. Jbig2 would substitute figures it thought were the same, meaning similar numbers were being wrongly swapped."

Google’s autocompletion: algorithms, stereotypes and accountability

You might have come across the latest UN Women awareness campaign. Originally in print, it has been spreading online for almost two days. It shows four women, each “silenced” with a screenshot from a particular Google search and its respective suggested autocompletions. [...] Guess what was the most common reaction of people?

They headed over to Google in order to check the “veracity” of the screenshots, and test the suggested autocompletions for a search for “Women should …” and other expressions.

This awareness campaign has been very successful in making people more aware of ~~the sexism in our world~~ Google’s autocomplete function.

When Roommates Were Random

As soon as today’s students receive their proverbial fat envelope from their top choice college, they are on Facebook meeting other potential freshmen. They are on sites like roomsurf.com and roomsync.com, scoping out prospective friends. By the time the roommate application forms arrive, many like-minded students with similar backgrounds have already connected and agreed to request one another.

It’s just one of many ways in which digital technologies now spill over into non-screen-based aspects of social experience. I know certain people who can’t bear to eat in a restaurant they haven’t researched on Yelp. And Google now tailors searches to exactly what it thinks you want to find.

But this loss of randomness is particularly unfortunate for college-age students, who should be trying on new hats and getting exposed to new and different ideas. Which students end up bunking with whom may seem trivial at first glance. But research on the phenomenon of peer influence — and the influences of roommates in particular — has found that there are, in fact, long-lasting effects of whom you end up living with your first year.

Why do I blog this? I've started collecting examples like this in the past few months. Might be the beginning of something, you never know. What I find intriguing here is that these algorithms exert various types of influence: sometimes it's a "framing" where the user's agency is limited (the racist camera for instance), sometimes it's not (you're not forced to use recommendation engines). I'm thinking about building a typology, perhaps: collecting these cases, talking to people. There's a whole list to be built.

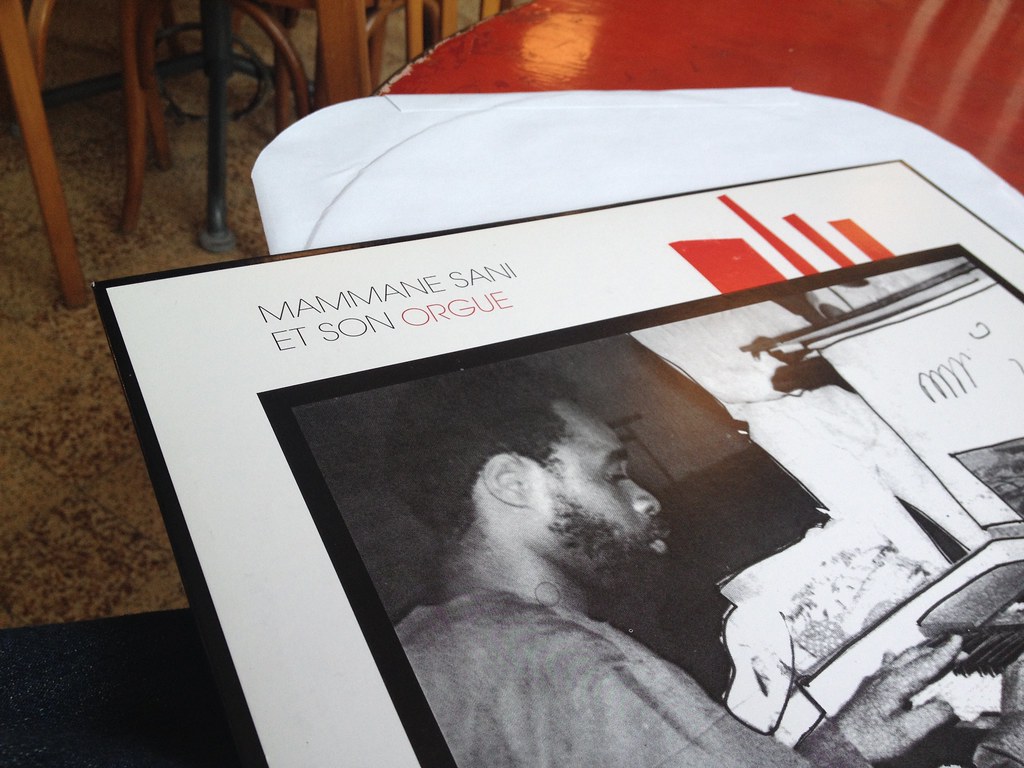

Saw that cover yesterday at Bongo Joe records, a café/record shop in Geneva. It's interesting to see that, for once, the artist AND their instrument are mentioned on the cover.
