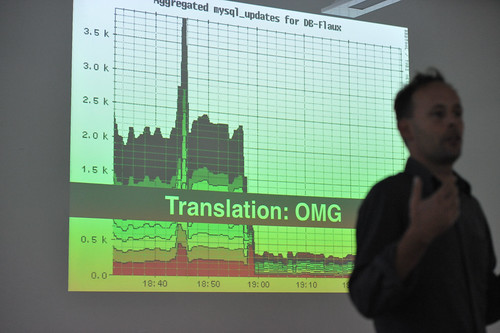

For no particular reason — perhaps a salute to Nicolas who will be presenting his work on design for failure at IxDA this week — I bring you this image taken during DE2008 in which Aaron Straup Cope discusses designing engineering systems with failure contingency as the critical path.

Why do I blog this? I find this perspective intriguing — it assumes system meltdown, anticipates it and delivers appropriate data to indicate when it might happen. If I remember correctly, there is no specific interest in being exact about failure, just that it will happen and you might be told roughly how long until it happens. So the effort is to help stave it off by various means — adding more servers to spread activity loads around, optimize queries, increase caching, whatever you need to do. This makes me think of the intractability of designing for deletion. If someone wants to extricate themselves from the databases of a service or system, there is almost certainly no quick and easy way — in fact, I doubt there is anyway at all, and most services are not obligated to handle these situations. If I told Google that I wanted to check out fully and completely, even if they wanted to do this, it is doubtful they could. Would someone have to run through all the backup *whatever — tapes? — wherever they may be? It’s not just the live systems, and its not just purging caches and so on. All of our data is on its own, like orphaned snapshots of moments in our lives, somewhere. I don’t necessarily find this chilling or anything like that. I’m just curious about this notion — designing for intractable, ugly, messy circumstances, like failure or deletion. Things that run counter to the intuition — we usually design for the beautiful, full, glorious 32-bit conditions.